Let’s stop separating AI, GenAI, and data. Looking around, I see the same scene replayed. In one corner, a “GenAI” team talking prompts, copilots, and prototypes. A little further, a “data” team talking quality, lineage, governance, GDPR. Between them, a PowerPoint bridge that looks like a ceasefire. At the end of the chain, an executive committee asking where the value is.

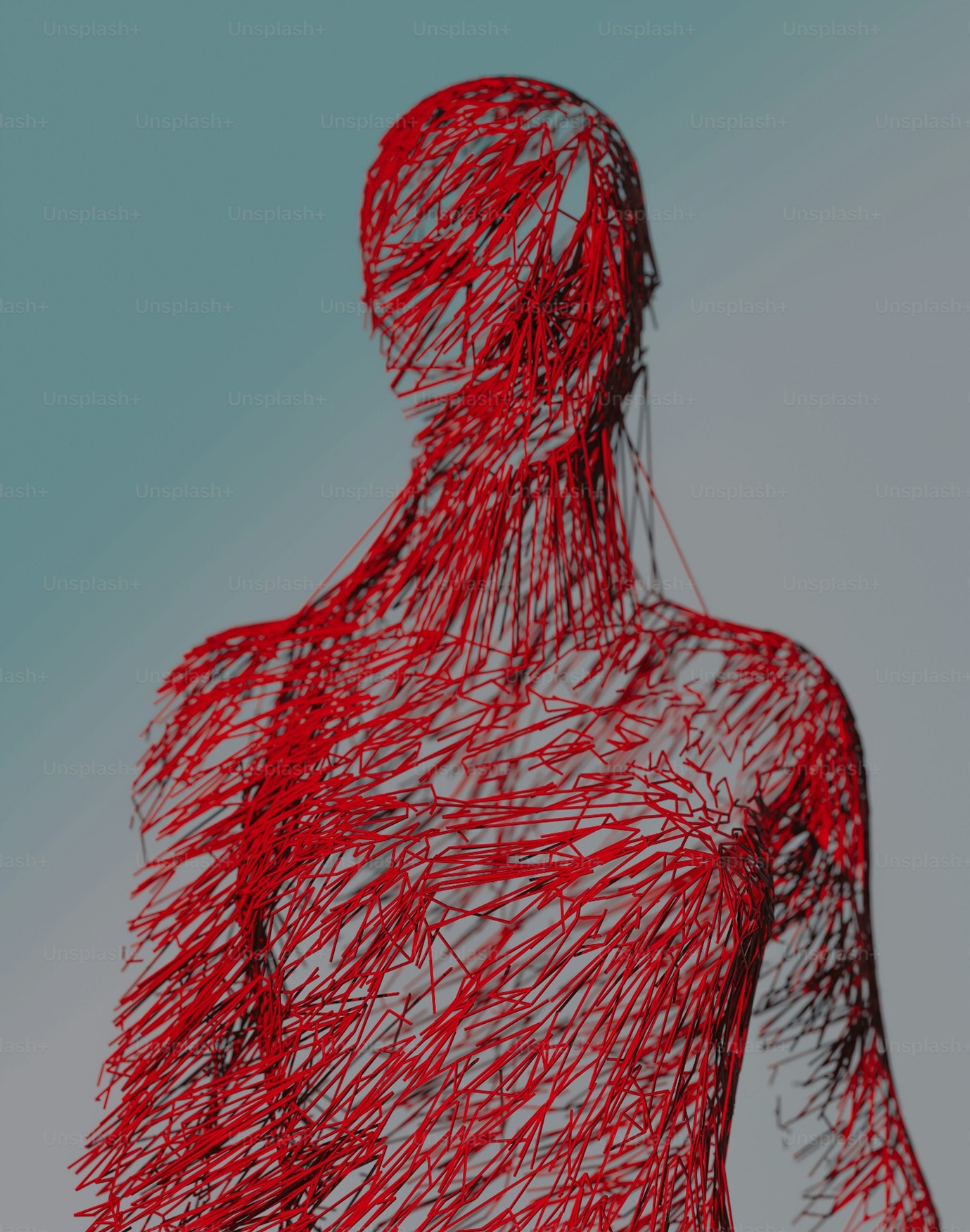

The truth is simple. Data is the bloodstream. Models are the brain. Analytics is the working memory. If the blood is poor, dirty, or siloed, the brain misfires and the memory makes up stories. You can add NVIDIA GPUs, new prompts, another POC. If the flow does not circulate, you mostly manufacture frustration.

The trap comes from our history. For a decade we treated data like a support function and AI like a lab experiment. GenAI accelerated everyone but also installed a persistent illusion. We think a powerful model compensates for fragile data. We think a clever prompt fixes a broken schema. We think a pretty assistant will cover for weak business rules. These are mirages. A model learns what it is given, reproduces what it sees, and generalizes the biases we let slip through. So the question is not which AI to deploy, but which system we will build where data, analytics, and models live together, at one cadence, with one logic of industrialization.

Where it works, the pattern is consistent. Data is treated as a product with an accountable owner, a quality contract, and a shared vocabulary. The platform is not a pile of lakes and silos. It connects a lakehouse, a feature store, and a vector index under a single governance model. Models of all kinds train and are observed on that same foundation. Most importantly, usage signals flow back. Every question put to a copilot, every user correction, every process exception is captured, labeled, and reinjected to improve data, semantics, features, and models. We are not doing “AI projects.” We are running a living loop.

This changes how decisions are made. We stop funding POCs out of curiosity and data lakes out of “prudence.” We fund use cases tied to concrete data products and measured models. The conversation moves from “can you generate this report faster” to “how much cognitive time are we returning to teams and how many errors are we removing from the process.” The right dashboards do not celebrate assistant adoption. They show task precision, reuse of data products, the lead time from idea to model to observable value, and the manual effort avoided. When metrics align to the real mechanics, internal politics lose their magic. The results speak.

Discipline is required. Metadata becomes critical. Lineage becomes a daily reflex. Usage rights and content sensitivity become guardrails built into the heart of user journeys. Yes, this sometimes slows apparent velocity. It is the price of sustainable speed. An AI that hallucinates less means an organization that corrects less. Well-described data means a model that learns better. Shared semantics means fewer endless alignment meetings. Real speed comes from clarity.

People ask where to start. Start with governance, but not the kind that lives in committees and approvals. I mean a living governance, operated in the platform and close to usage, that makes data contracts, glossaries, training sets, validated prompts, identified risks, and recorded decisions visible. Then pick a handful of use cases that truly cross the enterprise, not demo candy. Ideally, choose use cases that improve existing processes, because that makes value easier to measure. Run a cycle: data contract, training, deployment, observation, correction. And accept that the first turn of the loop will expose what you preferred not to see. Missing quality. Unclear usage rights. A poorly thought-out process. Good. What matters is having built the cycle that can correct it.

I do not oppose engineering and strategy. I refuse to separate them. A CIO must hold both. Draw the architecture that avoids technical dead ends. Install the operating model that avoids political ones. Tell a story to your peers that connects business, data, and AI without folklore. In that story, data is not a cost but a capability. GenAI is not a magic wand but an accelerator that reveals your strengths and weaknesses. Analytics is not a museum of charts but the active memory of decision-making.

If you already have three separate programs, do not “coordinate” them, merge them. One strategy, one backlog, one shared platform, and value metrics that cut through silos. If you have not started, start small but start right. One meaningful use case. One data flow mastered end to end. One model that actually learns from usage. Then repeat. The loop will carry you further than any five-year plan.

We do not need “stronger AI” if the system stays fractured. We need a living system where data circulates, models learn, teams understand, and value is visible. The rest follows with time, rigor, and calm. Stop splitting AI, GenAI, and data. Connect the bloodstream to the brain, keep the loop alive, and measure what matters.

What’s the core idea of “connect the bloodstream to the brain”?

Data is the bloodstream. Models are the brain. Analytics is working memory. If data quality, access, and semantics are fragmented, GenAI will amplify the mess, not fix it.

Why is separating “GenAI” teams and “data” teams a problem?

Because you end up with prompts and prototypes on one side, governance and quality on the other, and a PowerPoint “bridge” in between. Value dies in the gap: models can’t be reliably fed, deployed, observed, or improved at enterprise scale.

Can a powerful model compensate for weak data?

Not sustainably. A model learns what it’s given. If the underlying data is incomplete, biased, poorly defined, or siloed, the model will misfire or confidently fabricate. More GPUs and better prompts won’t repair broken schemas and business rules.

What does “a living loop” mean in practical terms?

Usage signals flow back into the system. Every copilot question, user correction, process exception, and piece of feedback becomes input to improve semantics, features, data products, and models. You’re not shipping “AI projects.” You’re operating a learning system.
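To make the loop concrete, here is a minimal Python sketch of capturing usage signals and routing each kind to an improvement queue. Everything in it is a hypothetical illustration, not a prescribed design: the `UsageSignal` type, the four signal kinds, the queue names, and the routing table are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Literal

# Hypothetical signal kinds, mirroring the examples in the answer above.
SignalKind = Literal["question", "correction", "exception", "feedback"]


@dataclass(frozen=True)
class UsageSignal:
    kind: SignalKind
    data_product: str  # hypothetical identifier of the data product involved
    payload: str       # raw text of the question, correction, or exception


# Hypothetical routing table: which improvement queue(s) each signal kind feeds.
ROUTES: dict[str, list[str]] = {
    "question": ["semantics"],                    # ambiguous questions refine the glossary
    "correction": ["training_set", "semantics"],  # user corrections become labeled examples
    "exception": ["data_product"],                # process exceptions point at data gaps
    "feedback": ["model_eval"],                   # ratings feed model evaluation
}


def route(signals: list[UsageSignal]) -> dict[str, list[UsageSignal]]:
    """Group captured signals into the queues that will consume them."""
    queues: dict[str, list[UsageSignal]] = defaultdict(list)
    for signal in signals:
        for target in ROUTES[signal.kind]:
            queues[target].append(signal)
    return dict(queues)


# Usage: three signals captured from a hypothetical procurement copilot.
signals = [
    UsageSignal("question", "supplier_master", "What counts as a preferred supplier?"),
    UsageSignal("correction", "supplier_master", "Category should be 'IT services'"),
    UsageSignal("exception", "invoices", "Invoice received without a PO number"),
]
queues = route(signals)
```

The point of the design is that no signal is simply logged and forgotten: each one lands in a queue owned by someone who improves semantics, training data, data products, or models.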

What does a “single foundation” look like architecturally?

A coherent platform where a lakehouse (data), feature store (ML-ready signals), and vector index (retrieval/knowledge) operate under one governance and one operating cadence, with shared observability and controls.

What is a “data product” in this context?

A data asset treated like a product: clear owner, defined consumers, quality contract, documented semantics, and measurable SLAs. Not a vague “dataset in a lake.”
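A quality contract only has teeth if it can be checked automatically. The following minimal Python sketch assumes a contract made of an owner, consumers, required fields, a maximum null rate, and a freshness SLA. The `DataContract` class, `check_contract`, and the example data product are hypothetical, not a specific tool’s format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DataContract:
    name: str
    owner: str                 # accountable owner
    consumers: list[str]       # declared consumers of the data product
    required_fields: list[str]
    max_null_rate: float       # e.g. 0.01 = at most 1% missing values per field
    freshness_sla: timedelta   # maximum acceptable age of the last update


def check_contract(contract: DataContract, rows: list[dict],
                   last_updated: datetime, now: datetime) -> list[str]:
    """Return human-readable contract violations (empty list = contract met)."""
    violations = []
    age = now - last_updated
    if age > contract.freshness_sla:
        violations.append(f"stale: last update {age} ago")
    for field_name in contract.required_fields:
        missing = sum(1 for row in rows if row.get(field_name) is None)
        # An empty dataset is treated as 100% missing rather than trivially valid.
        rate = missing / len(rows) if rows else 1.0
        if rate > contract.max_null_rate:
            violations.append(
                f"{field_name}: null rate {rate:.1%} exceeds {contract.max_null_rate:.1%}"
            )
    return violations


# Usage with a hypothetical "customer_orders" data product.
contract = DataContract(
    name="customer_orders",
    owner="sales-ops",
    consumers=["support-copilot", "finance-reporting"],
    required_fields=["order_id", "amount"],
    max_null_rate=0.01,
    freshness_sla=timedelta(hours=24),
)
rows = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": None}]
violations = check_contract(
    contract,
    rows,
    last_updated=datetime(2024, 1, 2, 0, tzinfo=timezone.utc),
    now=datetime(2024, 1, 2, 12, tzinfo=timezone.utc),
)
# violations == ["amount: null rate 50.0% exceeds 1.0%"]
```

Running a check like this on every load, and surfacing the result to the owner and consumers, is what separates a data product from a “dataset in a lake.”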

What metrics should leadership track to see real value?

Not “assistant adoption” first. Track: task precision and error rates, reuse of data products, lead time from idea → deployed model → observable outcome, manual effort avoided, and process exceptions reduced.
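Most of these metrics are simple arithmetic once the underlying events are recorded. The minimal Python sketch below assumes that each assisted task records whether its output was accepted without correction and the minutes it saved versus a manual baseline. It also assumes that each use case records three dates and that data-product consumption is logged as (use case, product) pairs. All names and fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class AssistedTask:
    correct: bool          # output accepted without user correction
    minutes_saved: float   # manual baseline time minus assisted time (assumed measurable)


@dataclass
class UseCase:
    idea_date: date
    deployed_date: date
    first_value_date: date  # first date the outcome shows up in a business metric


def task_precision(tasks: list[AssistedTask]) -> float:
    """Share of tasks accepted without correction; the error rate is 1 minus this."""
    return sum(t.correct for t in tasks) / len(tasks)


def manual_hours_avoided(tasks: list[AssistedTask]) -> float:
    return sum(t.minutes_saved for t in tasks) / 60


def median_lead_times(use_cases: list[UseCase]) -> dict[str, float]:
    """Median days for each stage: idea -> deployed model -> observable outcome."""
    return {
        "idea_to_deploy": median((u.deployed_date - u.idea_date).days for u in use_cases),
        "deploy_to_value": median((u.first_value_date - u.deployed_date).days for u in use_cases),
        "total": median((u.first_value_date - u.idea_date).days for u in use_cases),
    }


def data_product_reuse(usage: list[tuple[str, str]]) -> dict[str, int]:
    """Number of distinct use cases consuming each data product."""
    consumers: dict[str, set[str]] = defaultdict(set)
    for use_case, product in usage:
        consumers[product].add(use_case)
    return {product: len(cases) for product, cases in consumers.items()}


# Usage with illustrative numbers (not real results).
tasks = [AssistedTask(True, 30), AssistedTask(True, 15),
         AssistedTask(False, 0), AssistedTask(True, 45)]
use_cases = [
    UseCase(date(2024, 1, 1), date(2024, 2, 1), date(2024, 3, 1)),
    UseCase(date(2024, 2, 1), date(2024, 2, 11), date(2024, 2, 21)),
]
usage = [("invoice-matching", "suppliers"), ("spend-analysis", "suppliers"),
         ("spend-analysis", "contracts")]
```

Breaking lead time into stages matters because it shows whether delay sits in building the model or in turning a deployed model into observable value.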

Why does governance matter so much for GenAI?

Because GenAI touches sensitive content, rights, and traceability. Without metadata, lineage, and usage rights embedded in the workflow, you can’t scale safely or explain outcomes when things go wrong.

Doesn’t this discipline slow you down?

It slows apparent velocity and increases real speed. Less hallucination means less correction. Better-described data means better learning. Shared semantics means fewer alignment meetings and fewer rework cycles.

Where should a CIO start: platform, use cases, or governance?

Start with governance, but make it living and platform-operated: visible data contracts, glossaries, training sets, validated prompts, risks, and decisions. Then choose a few cross-enterprise use cases (not demo candy) and run the full loop.

What are “good” first use cases?

Ones that cross silos and improve existing processes, so value can be measured quickly: service workflows, procurement, finance ops, supply planning, customer support, quality and compliance. In short, anything with a clear cycle time, error cost, and auditability.

What should you do if you already have three separate programs (AI, GenAI, data)?

Don’t “coordinate” them. Merge them: one strategy, one shared backlog, one platform, one set of value metrics. Coordination preserves politics; integration removes them.

What’s the biggest trap organizations fall into right now?

Funding POCs out of curiosity and data programs out of prudence, without connecting them. You get motion everywhere and learning nowhere.

What’s the one-sentence takeaway for executives?

You don’t need “stronger AI.” You need a system where data circulates, models learn from usage, and value is observable, end to end.